The narrative around Large Language Models (LLMs) has recently shifted from impressive conversational demos to practical, scalable production deployment. This acceleration is largely thanks to a key technical breakthrough: function calling.

For months, the biggest hurdle to enterprise adoption of LLMs was their inability to reliably and programmatically interact with the outside world. They could generate beautiful text, but they couldn't, for example, look up a customer's order status in a database, send an email, or query a proprietary stock API. Developers were forced to build complex, brittle logic layers around the LLM to facilitate these actions.

The Game-Changing Mechanism

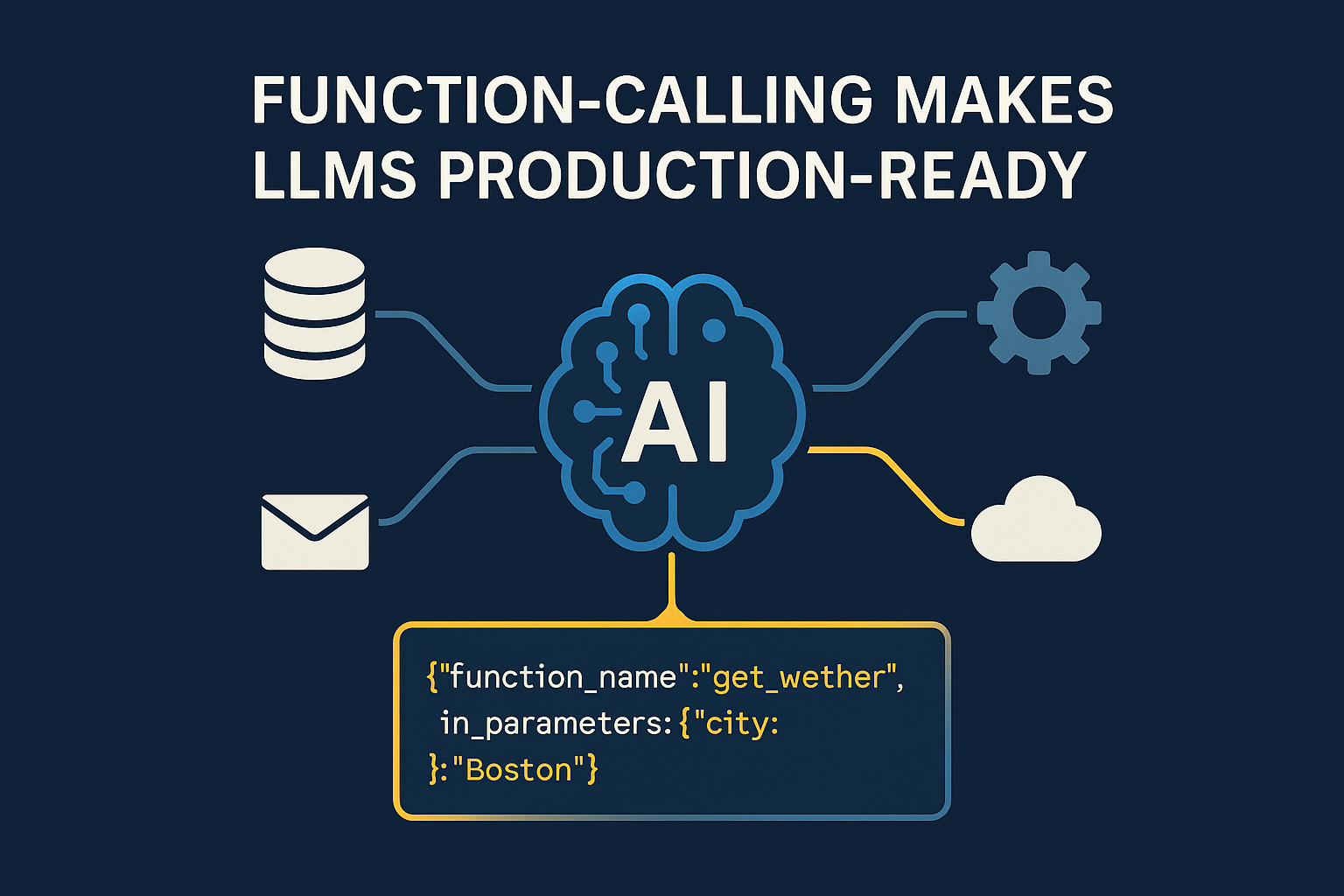

Function calling is not the LLM executing code, but rather the model intelligently deciding which external tool is needed and correctly formatting the necessary input arguments as a structured JSON object.

Here’s the typical workflow:

- Developer Defines Tools: The developer provides the LLM with clear schema definitions (names, descriptions, and required parameters) of available external functions (e.g., `get_weather(city)`, `book_flight(origin, destination, date)`).

- User Asks a Question: A user prompts the system with a request, such as "What's the weather like in Boston and then send me a summary email."

- LLM Calls a Function: The LLM doesn't answer the question directly. Instead, it recognizes the need for the external `get_weather` function and returns a structured call: `{"function_name": "get_weather", "parameters": {"city": "Boston"}}`.

- System Executes and Informs: The host application (your code) receives this structured call, executes the actual `get_weather` API request, and passes the result (the weather data) back to the LLM.

- LLM Synthesizes the Final Answer: The LLM uses the real-world data it received to formulate a precise, helpful, and grounded response. It can also now recognize the second step ("send me a summary email") and generate a second function call for the `send_email` tool.
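The workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any provider's actual API: `fake_model` is a scripted stand-in for the LLM, the `TOOLS` schema layout and the message format are invented for the example, and `get_weather` and `send_email` are stubs that return canned data instead of calling real services. Only the `function_name`/`parameters` call shape comes from the example in the text.

```python
# Step 1: tool schemas the developer hands to the model.
# The field layout is illustrative; each real provider defines its own format.
TOOLS = [
    {"name": "get_weather",
     "description": "Return the current weather for a city.",
     "parameters": {"city": {"type": "string", "required": True}}},
    {"name": "send_email",
     "description": "Email a text summary to the user.",
     "parameters": {"body": {"type": "string", "required": True}}},
]

SENT_EMAILS = []  # stands in for a real mail service


def get_weather(city):
    # Hypothetical stub: a real version would call a weather API.
    return {"city": city, "forecast": "sunny", "temp_f": 68}


def send_email(body):
    # Hypothetical stub: records the message instead of sending it.
    SENT_EMAILS.append(body)
    return {"status": "sent"}


# The host application maps tool names to the code that actually runs.
REGISTRY = {"get_weather": get_weather, "send_email": send_email}


def fake_model(messages, tools):
    """Scripted stand-in for the LLM (an assumption, not a real client).

    It returns a structured call until both tools have produced results,
    then returns a final text answer.
    """
    results = {m["name"]: m["content"] for m in messages if m["role"] == "tool"}
    if "get_weather" not in results:
        return {"function_name": "get_weather", "parameters": {"city": "Boston"}}
    weather = results["get_weather"]
    summary = f"{weather['city']} weather: {weather['forecast']}, {weather['temp_f']}F"
    if "send_email" not in results:
        return {"function_name": "send_email", "parameters": {"body": summary}}
    return {"content": f"{summary}. A summary email has been sent."}


def run(user_prompt, model=fake_model, max_steps=5):
    """Steps 2-5: loop until the model returns text instead of a call."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages, TOOLS)
        if "function_name" not in reply:
            return reply["content"]  # final, grounded answer
        name = reply["function_name"]
        func = REGISTRY.get(name)
        if func is None:
            result = {"error": f"unknown tool: {name}"}
        else:
            # The host code, not the model, executes the function.
            result = func(**reply["parameters"])
        messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("model did not produce a final answer")


if __name__ == "__main__":
    print(run("What's the weather like in Boston and then send me a summary email."))
```

The `max_steps` cap is a design choice worth keeping in a real system: because the model decides when to stop calling tools, the host loop needs its own bound.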

Why This is a Turning Point for Business

This capability elevates the LLM from a text generator to a reasoning engine and workflow orchestrator:

- Enhanced Reliability and Reduced Hallucinations: Grounding the model's output in live data returned by APIs substantially reduces the chance that the LLM "hallucinates" incorrect facts or invents details about proprietary data it has never seen.

- Complex Process Automation: For the first time, you can build conversational interfaces that handle multi-step business logic. Imagine an AI agent that can accept a customer request, check inventory, process a refund, and notify the warehouse—all guided by a natural language prompt.

- Tool-Agnostic Integration: This approach works with any external tool, database, or legacy system that exposes an API endpoint. It makes proprietary data accessible through a natural language interface.
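One way to picture the tool-agnostic point: as long as a backend can be wrapped in an ordinary function, a schema for it can be derived mechanically. The sketch below uses Python's standard `inspect` module to build a simple description from a function's signature and docstring. The helper `describe_tool`, the two stub functions, and the output layout are all hypothetical and chosen for illustration; real providers expect their own schema formats.

```python
import inspect


def describe_tool(func):
    """Build a simple schema dict from a function's signature and docstring."""
    params = {}
    for name, param in inspect.signature(func).parameters.items():
        annotated = param.annotation is not inspect.Parameter.empty
        params[name] = {
            # Use the annotation's name when one is given, else "any".
            "type": getattr(param.annotation, "__name__", "any") if annotated else "any",
            # A parameter without a default must be supplied by the model.
            "required": param.default is inspect.Parameter.empty,
        }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": params,
    }


def lookup_order(order_id: str):
    """Look up an order's status in a database (stubbed)."""
    return {"order_id": order_id, "status": "shipped"}


def check_inventory(sku: str, warehouse: str = "main"):
    """Check stock for a SKU in a legacy inventory system (stubbed)."""
    return {"sku": sku, "warehouse": warehouse, "in_stock": 12}


# Any wrapped backend is described the same way, whatever sits behind it.
SCHEMAS = [describe_tool(f) for f in (lookup_order, check_inventory)]
```

Here the database lookup and the legacy system look identical from the model's side: each is just a name, a description, and a set of parameters.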

Function calling has rapidly transitioned from a niche feature to a must-have component for any consulting project focused on deploying AI agents into core business operations. If you are not designing your AI applications with a robust function-calling layer, you are already building with a major competitive disadvantage.