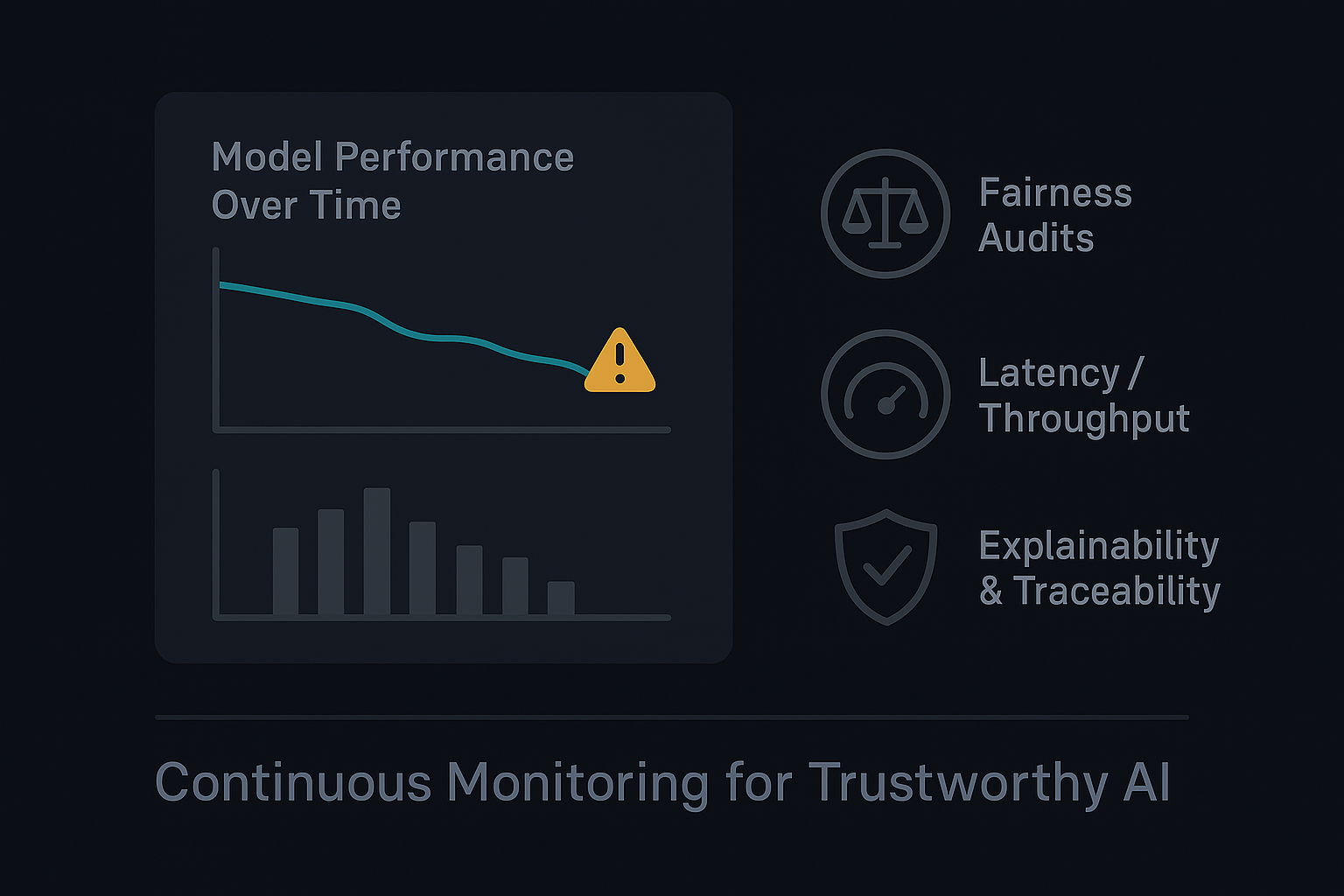

Building Trust in AI: Why Continuous Model Monitoring Matters More Than Ever

In 2024, many organizations rushed to deploy generative AI and predictive systems into production, yet few invested in ongoing oversight. Continuous model monitoring isn’t just a compliance checkbox; it’s the foundation for maintaining accuracy, fairness, and reliability as data and user behavior evolve.