In the last year, we’ve seen an explosion in the adoption of large language models and AI-driven decision systems across every sector—from finance to healthcare to logistics. But as more of these models moved from prototypes to production, a recurring challenge emerged: maintaining trust once the models were live.

Why Monitoring Can’t Be an Afterthought

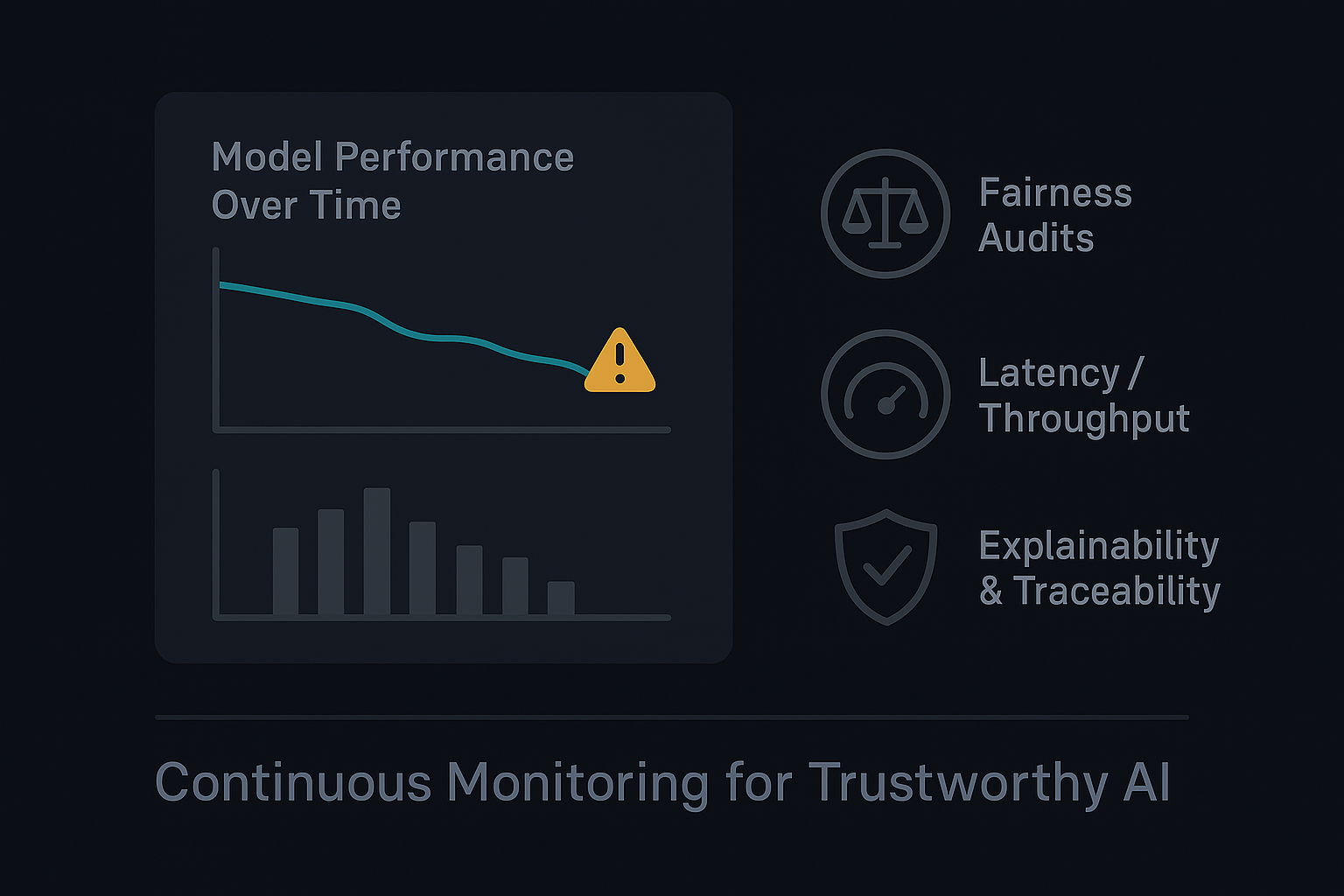

Machine learning models rarely fail overnight; they drift. The data a model sees in production gradually moves away from the distribution it was trained on as user behavior, market conditions, or input sources change. Even a small drift can have an outsized impact on predictions. Without continuous monitoring, teams often discover problems only after performance or customer trust has already declined.
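One common way to put a number on drift is the Population Stability Index (PSI). It bins a feature's training-time values and compares those bin proportions with the same bins in production data. The sketch below is a minimal illustration, not a prescribed method. The function name, the equal-width binning, the bin count, and the epsilon used for empty bins are all illustrative assumptions.

```python
import math
import random
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature using PSI.

    Bins are equal-width over the baseline's range; production values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clip out-of-range values
            counts[idx] += 1
        total = len(values)
        # eps keeps the log finite when a bin is empty in either sample
        return [max(c / total, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


if __name__ == "__main__":
    rng = random.Random(42)
    train = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
    live_same = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
    live_shifted = [rng.gauss(0.8, 1.0) for _ in range(5_000)]  # mean moved by 0.8 sd
    print(f"no drift:   PSI = {population_stability_index(train, live_same):.3f}")
    print(f"mean shift: PSI = {population_stability_index(train, live_shifted):.3f}")
```

A common rule of thumb treats a PSI below about 0.1 as stable and a PSI above about 0.2 as a shift worth investigating. These cutoffs are conventions, not guarantees, and teams usually tune per-feature thresholds against their own history.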

Monitoring is not limited to accuracy metrics. It includes fairness audits, anomaly detection, latency tracking, and evaluating LLM output for hallucinations or off-brand responses. Establishing a baseline of expected behavior allows teams to set meaningful thresholds for alerts and retraining cycles.
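A simple way to turn a baseline into an alert threshold is a z-score rule. Learn the mean and spread of a metric over a reference period, then flag new readings that fall too far outside that range. In the sketch below, the class name, the default of k = 3 standard deviations, and the sample latency values are illustrative assumptions. Production systems often use robust or seasonal baselines instead.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Baseline:
    """Expected behavior of one monitored metric, learned from a reference window."""

    mean: float
    stdev: float

    @classmethod
    def fit(cls, history: Sequence[float]) -> "Baseline":
        # statistics.stdev (sample standard deviation) needs at least two points
        return cls(statistics.fmean(history), statistics.stdev(history))

    def is_anomalous(self, value: float, k: float = 3.0) -> bool:
        """Flag values more than k standard deviations away from the baseline mean."""
        if self.stdev == 0:
            # A perfectly flat baseline: any deviation at all is unexpected
            return value != self.mean
        return abs(value - self.mean) / self.stdev > k


if __name__ == "__main__":
    # Illustrative p95 latency readings (ms) from a period considered healthy
    baseline = Baseline.fit([120, 118, 125, 122, 119, 121, 124])
    for reading in (123, 180):
        print(reading, "alert" if baseline.is_anomalous(reading) else "ok")
```

The same pattern applies to accuracy on a labeled sample, a fairness gap between groups, or the rate of flagged LLM responses. Only the metric fed into `fit` changes.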

The Rise of AI Governance Frameworks

In 2024, regulatory and ethical pressure increased. Frameworks like NIST’s AI Risk Management Framework and ISO/IEC 42001 started influencing enterprise AI policy. These standards emphasize traceability and lifecycle management—both of which depend on robust monitoring pipelines.

Teams that implemented governance early gained an advantage: they could demonstrate accountability without slowing innovation. Automated lineage tracking showed which data and model version produced a given prediction, and explainability tools helped show not just what a model predicted, but why.
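Lineage tracking can start as simply as writing an immutable record for every training run. Each record ties a model version to a content hash of its training data and to its configuration. The sketch below uses a hypothetical `LineageRecord` schema and JSON output intended for an append-only log. It is not tied to any specific framework, and it does not claim to satisfy any particular standard. Many teams use a model registry or metadata store for this purpose.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical lineage entry linking a model version to its exact inputs."""

    model_name: str
    model_version: str
    training_data_sha256: str
    hyperparameters: dict
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def fingerprint(data: bytes) -> str:
    """Content hash of the training data, so a later audit can verify it is unchanged."""
    return hashlib.sha256(data).hexdigest()


def record_training_run(name: str, version: str, data: bytes, params: dict) -> str:
    """Build a lineage record and serialize it as one JSON line for an append-only log."""
    record = LineageRecord(name, version, fingerprint(data), params)
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    # Illustrative snapshot; in practice this would be the exact bytes used for training
    snapshot = b"user_id,amount,label\n1,20.5,0\n2,310.0,1\n"
    print(record_training_run("fraud-scorer", "1.4.0", snapshot, {"max_depth": 6}))
```

Hashing the data rather than storing only its path matters here. A file can change in place, and the hash lets an auditor confirm that the data behind a deployed model matches the data recorded at training time.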

Practical Steps for Implementation

- Establish Monitoring Early – Treat observability as part of your MLOps pipeline, not an afterthought.

- Automate Drift Detection – Integrate statistical monitoring that alerts when feature distributions or output patterns shift.

- Track Real-World Feedback – Incorporate user signals and error reports as part of continuous learning.

- Audit Regularly – Schedule model audits that include ethical and bias reviews alongside performance metrics.
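The drift and feedback steps above both come down to watching a signal over time and alerting when it moves. As a minimal sketch, assume user feedback arrives as a stream of yes/no "was this a problem?" events, such as a thumbs-down or an error report. A rolling-window error rate could then look like the code below. The `FeedbackMonitor` class, the window size, the threshold, and the minimum-event guard are illustrative choices, not recommended values.

```python
from collections import deque


class FeedbackMonitor:
    """Rolling error rate over the most recent user feedback events.

    Hypothetical helper: each event is True if the user reported a problem
    and False otherwise.
    """

    def __init__(self, window: int = 500, threshold: float = 0.05, min_events: int = 100):
        self.events: deque[bool] = deque(maxlen=window)  # oldest events drop off automatically
        self.threshold = threshold
        self.min_events = min_events

    def record(self, is_error: bool) -> None:
        self.events.append(is_error)

    @property
    def error_rate(self) -> float:
        return sum(self.events) / len(self.events) if self.events else 0.0

    def should_alert(self) -> bool:
        # Require enough events so that a few early complaints cannot trigger an alert
        return len(self.events) >= self.min_events and self.error_rate > self.threshold


if __name__ == "__main__":
    monitor = FeedbackMonitor(window=200, threshold=0.05, min_events=50)
    for is_error in [False] * 195 + [True] * 25:
        monitor.record(is_error)
    print(f"error rate {monitor.error_rate:.3f}, alert={monitor.should_alert()}")
```

A fixed-size `deque` keeps memory constant and lets old feedback age out. As a result, the rate reflects the model's recent behavior rather than its entire history.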

The result isn’t just safer AI—it’s more resilient AI. Teams that invest in monitoring spend less time firefighting and more time improving outcomes.

As we move into 2025, the winners in AI won’t just be those with the largest models or fastest deployments. They’ll be the ones whose systems remain transparent, trustworthy, and stable over time.